
January 16, 2024

DOCOMO Develops World's First Technology Utilizing Generative AI to Automatically Generate Non-Player Characters in Metaverse

— Auto-generates behavior tree without the need for advanced programming —

TOKYO, JAPAN, January 16, 2024 --- NTT DOCOMO, INC. announced today that it has developed a generative AI that automatically generates non-player characters (NPCs), that is, characters not controlled by the player, in the metaverse from text input alone. The technology, which DOCOMO believes is the world's first of its kind,[1] generates NPCs with distinct appearances, behaviors and roles in just 20 minutes,[2] eliminating the need for specialized programming or algorithmic expertise.

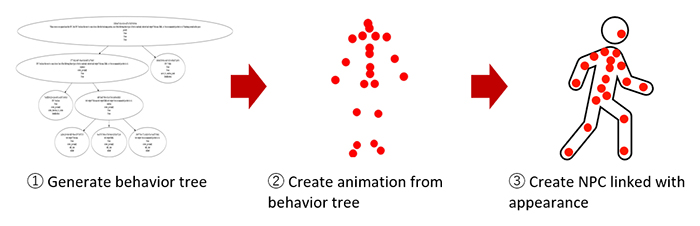

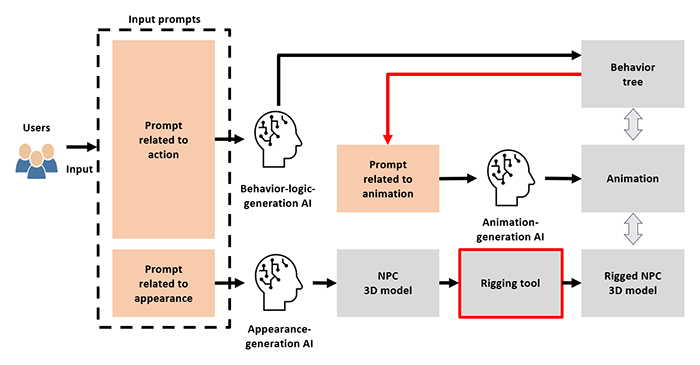

The technology integrates three generative AIs: behavior-logic-generative AI, animation-generative AI,[3] and appearance-generative AI.[4] The behavior-logic-generative AI and the seamless linkage of all three AIs were developed by DOCOMO and are the key advances that set the new technology apart.

Behavior-logic-generative AI automatically creates a unique behavior tree[5] that defines an NPC's behavior in the metaverse from text alone. Until now, creating a behavior tree has typically required skilled programmers to write code implementing the required specifications.

In addition, the three generative AIs are linked automatically: the behavior tree generated by the behavior-logic-generative AI, the NPC skeletal data generated by the animation-generative AI, and the NPC 3D model generated by the appearance-generative AI are combined, so that complete NPCs can be generated from text alone.

This technology was developed as part of DOCOMO's Lifestyle Co-Creation Lab, which works with partners to evaluate technologies that enhance daily life. The lab's objective is to build an Innovation Co-Creation Platform accessible across various industries. Building on the concept of metacommunication and aiming to foster a new community identity, DOCOMO plans to integrate the technology with its large language model (LLM) infrastructure and personal data resources.

Going forward, DOCOMO plans to further enhance this technology and implement it in DOOR, the metaverse operated by NTT QONOQ, INC., within the fiscal year ending March 2025, with the goal of helping customers enjoy more convenient and enriched lives. Related technologies and services will also be aimed at supporting regional revitalization and development, among other applications.

This technology will be introduced during the “docomo Open House '24” online event beginning on January 17.


- [1] According to DOCOMO's research (as of December 2023). Patent pending by NTT DOCOMO, INC.

- [2] Based on verification results of this project.

- [3] Uses Human Motion Diffusion Model technology.

- [4] Uses Text2Mesh.

- [5] A structure used to create NPC (Non-Player Character) behaviors, in which nodes are arranged in a hierarchical tree to visually represent the character's thoughts and actions, making the flow of reasoning that leads to an action easy to follow.

Appendix

Technology Overview

Goal

The new technology aims to make metaverses feel more vibrant by populating them with bustling NPCs, drawing on crowd psychology. It implements NPC behavior efficiently and cost-effectively, and seamlessly connects the behavior-logic-generative AI with designated animation-generative and appearance-generative AIs.

Details of the technology

1. Behavior-logic-generative AI

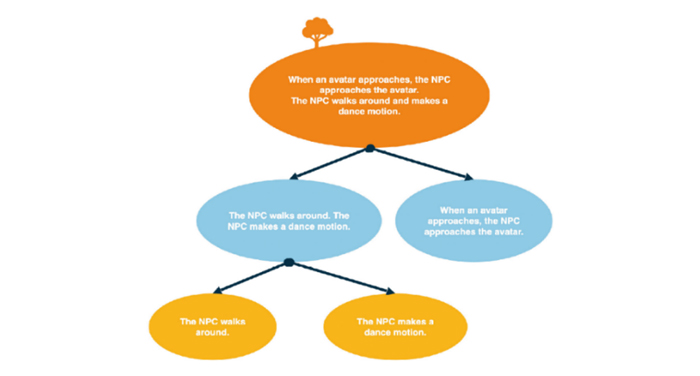

Behavior-logic-generative AI uses prompts to generate the parameters for each node[1] and organizes the nodes into a behavior tree. Node parameters are generated with large language models (LLMs), using the following innovations:

- Restricting each LLM output to a single node's parameters

When the parameters for every node in the entire tree are produced in a single LLM input/output exchange, the size of the resulting behavior tree is limited by the LLM's expressive capacity. To overcome this limitation, each LLM output is restricted to the parameters of a single node.

- Including child prompts in the LLM output

Splitting generation into individual nodes, however, created a new challenge: maintaining the tree structure across nodes that are generated separately. This was resolved by having the LLM append a child prompt[2] to its output; that child prompt is then used to generate the parameters of the child node. This approach preserves the structure between parent and child nodes, allowing the complete behavior tree to be created. Together, these advances make it possible to generate diverse NPC behavior logic regardless of the LLM's expressive capacity.
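The two techniques above can be illustrated with a short Python sketch. It is not DOCOMO's implementation: the `llm` callable, the `NodeOutput` and `Node` types, the `max_depth` cap and the canned `fake_llm` responses are all hypothetical stand-ins. What it shows is the general pattern: each call returns one node's parameters plus its child prompts, and recursing on those child prompts rebuilds the full tree.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class NodeOutput:
    """Hypothetical shape of one LLM response: one node's parameters plus
    one prompt per child node (an empty list marks a leaf)."""
    params: dict
    child_prompts: list[str]


@dataclass
class Node:
    params: dict
    children: list["Node"] = field(default_factory=list)


def generate_tree(prompt: str, llm: Callable[[str], NodeOutput],
                  max_depth: int = 5) -> Node:
    """Build a behavior tree with one LLM call per node.

    Each call only has to describe a single node, so the tree's size is not
    bounded by what one response can hold. The child prompts returned with
    each node carry the parent-child structure into the next calls.
    """
    out = llm(prompt)
    node = Node(params=out.params)
    if max_depth > 0:  # safety cap: children below this depth are not generated
        for child_prompt in out.child_prompts:
            node.children.append(generate_tree(child_prompt, llm, max_depth - 1))
    return node


# Usage with canned responses standing in for a real LLM.
def fake_llm(prompt: str) -> NodeOutput:
    canned = {
        "a shopkeeper NPC": NodeOutput({"type": "selector"},
                                       ["greet a customer", "tidy the shelves"]),
        "greet a customer": NodeOutput({"type": "action", "name": "greet"}, []),
        "tidy the shelves": NodeOutput({"type": "action", "name": "tidy_shelves"}, []),
    }
    return canned[prompt]


tree = generate_tree("a shopkeeper NPC", fake_llm)
```

Because each call sees only its own prompt, a larger tree means more calls rather than one longer response.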

2. Three generative AIs

Coordination among the three generative AIs is achieved through two primary links: the link between the behavior-logic-generative AI and the animation-generative AI, and the link between the animation-generative AI and the appearance-generative AI.

- Linkage between behavior-logic-generative and animation-generative AIs

Because the prompts needed for animation generation are produced during the behavior-logic generation process, the two AIs are linked seamlessly, with no need for separate prompts written specifically for animation generation.

- Linkage between animation-generative and appearance-generative AIs

A special tool (rigging tool) has been developed to automatically embed skeletal data that matches the animation output from the animation-generative AI into the NPC 3D model created by the appearance-generative AI.
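The first link can be sketched in Python as follows, assuming (hypothetically) that each action leaf of the generated tree stores a `name` and the `animation_prompt` produced alongside it during behavior-logic generation. `collect_animation_prompts`, `link_animations` and the lambda standing in for the animation-generative AI are illustrative only; the rigging step of the second link is not modeled here.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Node:
    params: dict
    children: list["Node"] = field(default_factory=list)


def collect_animation_prompts(root: Node) -> dict[str, str]:
    """Return {action name: animation prompt} for every leaf that carries one."""
    prompts: dict[str, str] = {}
    stack = [root]
    while stack:
        node = stack.pop()
        if not node.children and "animation_prompt" in node.params:
            prompts[node.params["name"]] = node.params["animation_prompt"]
        stack.extend(node.children)
    return prompts


def link_animations(root: Node, animation_ai: Callable[[str], object]) -> dict[str, object]:
    """Generate one animation clip per action, keyed by the action it belongs to."""
    return {name: animation_ai(p) for name, p in collect_animation_prompts(root).items()}


# Usage with a stand-in for the animation-generative AI.
tree = Node({"type": "selector"}, [
    Node({"type": "action", "name": "greet",
          "animation_prompt": "a person waves hello"}),
    Node({"type": "action", "name": "tidy_shelves",
          "animation_prompt": "a person reaches up to a shelf"}),
])
clips = link_animations(tree, lambda prompt: f"clip<{prompt}>")
```

Keying clips by action name keeps each animation attached to the behavior that triggers it, so no separate animation prompts have to be written by hand.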

Background

Although metaverses have grown rapidly and are attracting increasing attention from industry, reaching a substantial user base remains a challenge. To address this, NPCs have been introduced to make these spaces feel lively and to attract users through crowd psychology.

Benefits

Conventionally, creating behavior trees for 10 NPCs in a metaverse and linking them to the NPCs' animations and appearances takes about 42 hours.[3] The new technology reduces this to about one hour, making it possible to quickly create a vibrant atmosphere in the metaverse and addressing a critical challenge for metaverse providers.
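Taking both figures as approximate, the reduction for 10 NPCs works out as follows:

```latex
\frac{42\ \text{h}}{1\ \text{h}} = 42 \quad\Rightarrow\quad
\text{time saved} = 1 - \frac{1}{42} \approx 97.6\%, \qquad
\frac{42\ \text{h}}{10\ \text{NPCs}} = 4.2\ \text{h per NPC (conventional)}
```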

Others

- Non-Player Characters

NPCs are entities that exist within a game or other metaverse environment and act independently of player control. Today's metaverse NPCs typically have behavior logic, animations and distinctive appearances to enhance users' interactive experience. Developing and configuring an NPC's behavior logic, which is often represented as a behavior tree, typically requires manual effort.

- Behavior Tree

A behavior tree consists of intermediate nodes, which select among NPC behaviors, and terminal nodes, which define those behaviors. Linking these nodes into a tree structure makes it possible to express a wide variety of behaviors. Activating the top (root) node starts the decision process, which passes through the connected intermediate nodes until a terminal node is reached and its action is determined.

In games, various techniques have emerged for expressing behavior logic, the decision-making algorithms that drive the behavior of virtual agents. After debuting in Halo 2 (2004),[4] the behavior tree was integrated into numerous virtual agents and its adoption expanded significantly. Known for being more scalable than other methods, it has dominated game behavior logic since 2011 with a current market share of 70%, and it continues to be widely used to define NPC behavior in the metaverse.
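To make the node roles described above concrete, here is a minimal Python sketch of how a behavior tree is commonly evaluated, using the widely used selector and sequence node types as the intermediate nodes. The shopkeeper tree, the `world` dictionary and all class names are hypothetical, and production engines also support a RUNNING status for actions that span multiple frames, which this sketch omits.

```python
from enum import Enum
from typing import Callable


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2


class Condition:
    """Terminal node that checks the world state without acting."""
    def __init__(self, check: Callable[[dict], bool]):
        self.check = check

    def tick(self, world: dict) -> Status:
        return Status.SUCCESS if self.check(world) else Status.FAILURE


class Action:
    """Terminal node that performs one concrete behavior (here: records it)."""
    def __init__(self, name: str):
        self.name = name

    def tick(self, world: dict) -> Status:
        world.setdefault("performed", []).append(self.name)
        return Status.SUCCESS


class Sequence:
    """Intermediate node: runs children in order, stopping at the first failure."""
    def __init__(self, *children):
        self.children = children

    def tick(self, world: dict) -> Status:
        ok = all(c.tick(world) is Status.SUCCESS for c in self.children)
        return Status.SUCCESS if ok else Status.FAILURE


class Selector:
    """Intermediate node: tries children in order, stopping at the first success."""
    def __init__(self, *children):
        self.children = children

    def tick(self, world: dict) -> Status:
        ok = any(c.tick(world) is Status.SUCCESS for c in self.children)
        return Status.SUCCESS if ok else Status.FAILURE


# Ticking the root starts the decision process down to one terminal action.
shopkeeper = Selector(
    Sequence(Condition(lambda w: w.get("customer_nearby", False)), Action("greet")),
    Action("tidy_shelves"),
)

busy = {"customer_nearby": True}
shopkeeper.tick(busy)
idle = {"customer_nearby": False}
shopkeeper.tick(idle)
```

With a customer nearby, the selector's first branch succeeds and the NPC greets; otherwise the sequence fails at its condition and the selector falls back to tidying the shelves.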

- [1] A defined set of options for an NPC to decide on an action in response to a particular situation and the content of that action.

- [2] Segmented and reconstructed prompts are used as inputs to derive parameters for the parent node in the system.

- [3] Verified by experts.

- [4] Game software for the Xbox, released by Microsoft in 2004.